How to Select the AI Methodology (Fine Tuning vs Agentic vs RAG)

A guide to choosing the right AI methodology by comparing Fine Tuning, Agentic approaches, and Retrieval-Augmented Generation (RAG).

Selecting the right strategy for integrating AI into your software can be challenging. Rather than adopting a one-size-fits-all solution, it's important to evaluate your task requirements and choose the methodology that best suits your needs: Fine Tuning, Agentic approaches, or Retrieval-Augmented Generation (RAG).

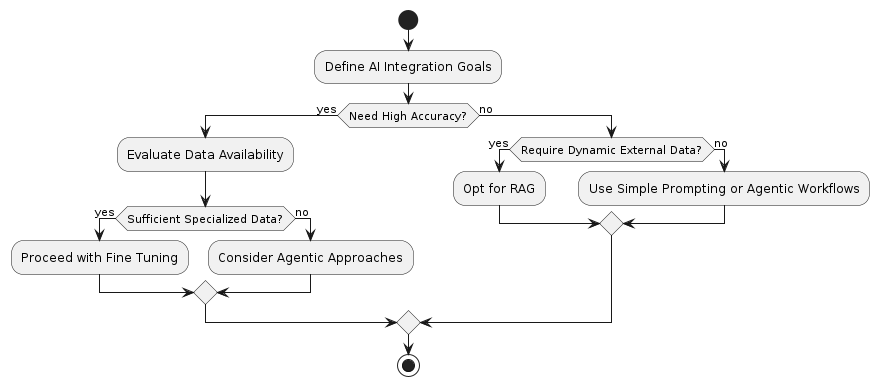

Decision Tree

Below is a quick overview of each approach:

Fine Tuning

Description: Further train a pre-trained model on specialized, domain-specific data.

Use Cases: High-accuracy tasks, critical applications, and scenarios demanding a unique style.

Pros:

- Superior precision and consistent performance

- Works well with small datasets (e.g., 100 or fewer examples)

- LoRA adapters enable fine tuning on smaller models (typically 13B parameters or fewer)

- Open-weight model support ensures exportability and avoids vendor lock-in

Cons: Involves extra steps such as data collection and hyperparameter tuning, plus more complex deployment and resource management.

Agentic Approaches

Description: Leverage autonomous AI agents to handle adaptive, multi-step reasoning.

Use Cases: Exploratory or creative tasks, and environments requiring dynamic decision-making.

Pros: Increased flexibility and reduced setup complexity compared to full fine tuning.

Cons: May produce less predictable outcomes if the agents' decision frameworks are not carefully managed.

RAG (Retrieval-Augmented Generation)

Description: Combine a language model with external data retrieval systems to enrich responses.

Use Cases: Applications relying on up-to-date or extensive external knowledge bases.

Pros: Simplifies incorporation of dynamic external information without heavy retraining.

Cons: Potential latency due to real-time data fetching.

1. Fine Tuning

Fine tuning adapts a pre-trained language model with data specific to your application. This method is ideal when:

- Precision is Critical: For example, a customer service chatbot may need to call the correct API reliably. Even if prompting manages 95% accuracy, fine tuning can push it to 99% for mission-critical tasks like handling transactions or refunds.

- Custom Communication Style: If your application must emulate a unique, personal tone or idiosyncratic language that’s difficult to specify in a prompt, fine tuning can capture these subtleties.

- Stable Query Environments: Tasks with predictable interactions benefit from the tailored behavior achieved through fine tuning.

Additional Considerations:

- Data Requirements: Thanks to techniques like LoRA, which modifies only a limited set of parameters, effective fine tuning can require as few as 100 examples.

- Open-Weight Models: Many providers now support fine tuning on open-weight models, allowing you to download and export the fine-tuned weights. This ensures portability and reduces vendor lock-in compared to tuning closed models.

- Implementation Complexity: Although fine tuning can significantly boost performance for critical applications, it requires extra steps in data collection, tuning provider selection, hyperparameter management, and compute resource allocation.
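To make the "limited set of parameters" point concrete, here is a small worked calculation. It assumes the standard LoRA formulation, where a frozen weight matrix of shape `d_out x d_in` gets two small trainable matrices of rank `r`. The hidden size (4096) and rank (8) are illustrative assumptions, not figures from any particular model.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters in one dense weight matrix (what full fine tuning would update)."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by one LoRA adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical layer dimensions, chosen only for illustration.
hidden_size = 4096
rank = 8

full = full_params(hidden_size, hidden_size)
lora = lora_params(hidden_size, hidden_size, rank)
fraction = lora / full

print(f"full matrix: {full:,} parameters")
print(f"LoRA adapter: {lora:,} parameters ({fraction:.2%} of the full matrix)")
```

Under these assumptions the adapter trains well under 1% of the parameters in that layer, which is one reason a small dataset can be enough to steer the model.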

2. Agentic Approaches

Agentic approaches use autonomous AI agents designed to manage dynamic, multi-step reasoning. This method works best when:

- Flexibility is Needed: Ideal for creative problem solving or exploratory applications where task parameters may shift over time.

- Adaptive Decision Making: Autonomous agents can adjust to varying inputs in real time, making them valuable for tasks that are not strictly defined.

- Reduced Setup Complexity: Unlike fine tuning, agentic workflows typically bypass the need for collecting large amounts of specialized training data.

Keep in mind that while agentic systems offer greater flexibility, they can sometimes produce unpredictable behaviors if their decision frameworks are not carefully managed.
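One way to keep an agent's decision framework manageable is to restrict it to a fixed set of tools and cap the number of steps it may take. The sketch below illustrates that idea only. The tools, the `stub_policy` function (a stand-in for a real model call), and the order ID are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools; a real system would call actual services here.
def lookup_order(order_id: str) -> str:
    return f"order {order_id}: delivered"


def issue_refund(order_id: str) -> str:
    return f"refund issued for order {order_id}"


# Allowlist: the agent can only invoke tools registered here.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "issue_refund": issue_refund,
}

Policy = Callable[[str, List[str]], Tuple[str, str]]


def stub_policy(goal: str, history: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM call: chooses the next action from the history so far."""
    if not history:
        return ("lookup_order", "A123")
    if len(history) == 1:
        return ("issue_refund", "A123")
    return ("finish", "")


def run_agent(goal: str, policy: Policy, max_steps: int = 5) -> List[str]:
    """Run the act-observe loop until the policy finishes or max_steps is reached."""
    history: List[str] = []
    for _ in range(max_steps):
        action, arg = policy(goal, history)
        if action == "finish":
            break
        if action not in TOOLS:
            # Record the problem instead of executing an unknown action.
            history.append(f"error: unknown tool {action}")
            continue
        history.append(TOOLS[action](arg))
    return history


trace = run_agent("refund order A123", stub_policy)
for observation in trace:
    print(observation)
```

The step cap and the tool allowlist are deliberately simple guards. They do not make the agent's choices predictable, but they bound what it can do when it goes off track.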

3. Retrieval-Augmented Generation (RAG)

RAG merges the capabilities of large language models with external data retrieval, enabling the model to produce responses informed by real-time or enriched external data. This approach is particularly suited for:

- Dynamic Knowledge Integration: When your application needs access to continually updated or extensive external knowledge bases.

- Simpler Data Management: Instead of embedding all information inside the model, RAG retrieves data on demand, reducing the need for comprehensive retraining.

- Effective Contextual Responses: By combining retrieval with generation, this method often produces more accurate and context-aware outputs.

The main trade-off with RAG is the potential latency from real-time data fetching.
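The retrieve-then-generate flow can be sketched in a few lines. This example swaps in a simple bag-of-words cosine similarity for retrieval so that it runs with the standard library alone; production systems typically use embedding models and a vector store instead. The documents, the prompt wording, and the function names are assumptions made for illustration, and the final model call is left out.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical knowledge base; in practice this would be an external data source.
DOCS = [
    "Refunds are processed within 5 business days.",
    "Shipping is free for orders over 50 dollars.",
    "Support is available by chat from 9am to 5pm.",
]


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        docs, key=lambda d: cosine(query_vec, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, docs: List[str], k: int = 1) -> str:
    """Place the retrieved context ahead of the question for the language model."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("How long do refunds take?", DOCS)
print(prompt)
```

Updating the knowledge base here means editing `DOCS`, not retraining anything. The retrieval step runs on every request, which is where the latency cost comes from.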

Choosing the Right Path

When deciding which methodology to adopt, consider these factors:

- Task Criticality: If your application cannot tolerate errors and demands high precision, fine tuning might be your best route.

- Flexibility vs. Stability: For dynamic tasks requiring real-time decision making, agentic approaches offer the necessary adaptability. For more static requirements, fine tuning may be preferable.

- Data Dynamics: If incorporating up-to-date or extensive external information is critical, RAG provides a streamlined solution without the overhead of retraining large models.
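The factors above can be written down as a rough rule-based helper. This is only a sketch of the reasoning in this section, not a reproduction of the decision tree image. The field names and the fallback to plain prompting are assumptions, and real decisions will involve cost and team constraints that it ignores.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Requirements:
    """Hypothetical checklist of the factors discussed above."""
    needs_high_precision: bool = False
    needs_custom_style: bool = False
    needs_fresh_external_data: bool = False
    needs_multi_step_adaptation: bool = False


def suggest_methodologies(req: Requirements) -> List[str]:
    """Map requirements to candidate methodologies; several may apply at once."""
    suggestions: List[str] = []
    if req.needs_fresh_external_data:
        suggestions.append("RAG")
    if req.needs_multi_step_adaptation:
        suggestions.append("agentic")
    if req.needs_high_precision or req.needs_custom_style:
        suggestions.append("fine tuning")
    # With no special requirements, start with the simplest option.
    return suggestions or ["prompting"]


print(suggest_methodologies(Requirements()))
print(suggest_methodologies(
    Requirements(needs_high_precision=True, needs_fresh_external_data=True)
))
```

Returning a list instead of a single answer reflects that the approaches are not mutually exclusive; a hybrid can be reasonable.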

It’s also worth noting that for many teams (roughly 75%), simpler techniques like prompt engineering or agentic workflows might yield sufficient performance. Fine tuning is typically reserved for the critical 25% of cases where alternative techniques fall short.

Remember, many applications might perform well with simpler prompting methods or hybrid solutions. Carefully evaluate your system requirements, performance criteria, and resource constraints to choose the most fitting AI methodology.

*This is based on my experience applying AI in production software applications, and has also been noted by the staff at DeepLearningAI.