Fine-tuning LLaMA 3 with LoRA Adapters

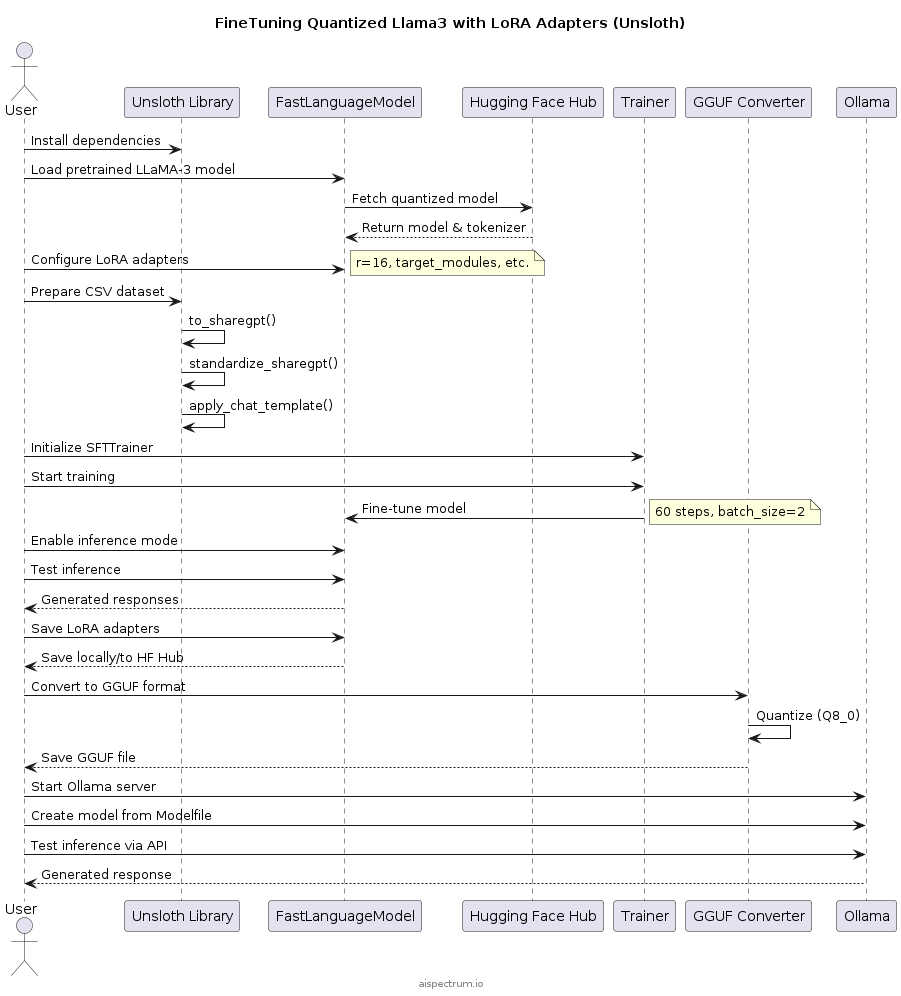

End-to-end workflow for fine-tuning LLaMA 3 (8B parameters; about 5 GB as a 4-bit checkpoint) with Unsloth, from dataset preparation to GGUF export and Ollama deployment.

This guide walks through a complete workflow for fine-tuning Meta's LLaMA 3 (8B) model with Unsloth: loading a 4-bit quantized base model, configuring LoRA adapters for parameter-efficient training, preparing a custom dataset, running the fine-tuning, and exporting the result to GGUF format for deployment with Ollama. Because the base model is quantized to 4-bit and training uses memory-saving techniques such as gradient checkpointing and an 8-bit optimizer, the whole run fits on a single Tesla T4 GPU, and the 60 training steps finish in about 10 minutes.

Hardware & Training Specifications

| Setting | Value |
|---|---|
| GPU | Tesla T4 |
| Total GPU memory | 14.741 GB |
| Memory reserved before training | 5.496 GB |
| Training time | ~10 minutes |
| Dataset size | 100K samples |
| Base model | unsloth/llama-3-8b-bnb-4bit |
| LoRA rank | 16 |
| Per-device batch size | 2 |
| Gradient accumulation steps | 4 (effective batch size 8) |
| Training steps | 60 |
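
The batch settings determine how much of the dataset a 60-step run actually sees. A small sketch of that arithmetic, assuming the Hugging Face Trainer convention that one optimizer step consumes per-device batch × gradient accumulation steps × number of GPUs examples, and taking "100K samples" as exactly 100,000:

```python
def examples_seen(steps: int, per_device_batch: int, grad_accum: int, num_gpus: int = 1) -> int:
    """Training examples consumed after `steps` optimizer steps (Trainer convention, assumed)."""
    effective_batch = per_device_batch * grad_accum * num_gpus
    return steps * effective_batch


def dataset_fraction(steps: int, per_device_batch: int, grad_accum: int, dataset_size: int) -> float:
    """Fraction of the dataset visited, capped at 1.0 for multi-epoch runs."""
    return min(1.0, examples_seen(steps, per_device_batch, grad_accum) / dataset_size)


if __name__ == "__main__":
    seen = examples_seen(steps=60, per_device_batch=2, grad_accum=4)
    # 100_000 is the table's "100K samples", assumed to be exact.
    frac = dataset_fraction(steps=60, per_device_batch=2, grad_accum=4, dataset_size=100_000)
    print(f"examples seen: {seen}, fraction of dataset: {frac:.2%}")
```

Under these assumptions the run uses 480 examples, under 0.5% of the dataset; raising max_steps, or setting num_train_epochs instead, is how to train on more of it.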

Workflow Overview

- Setup & Installation
  - Install Unsloth and its dependencies
  - Load the pretrained LLaMA 3 model from the Hugging Face Hub
- Model Configuration
  - Configure LoRA adapters (rank 16, target modules)
  - Prepare the dataset for fine-tuning
- Dataset Preparation

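from unsloth import to_sharegpt, standardize_sharegpt, apply_chat_template
# csv_data: a Hugging Face Dataset loaded from the CSV; set merged_prompt= / output_column_name= to match its columns
dataset = to_sharegpt(csv_data)  # merge CSV columns into ShareGPT-style conversations
dataset = standardize_sharegpt(dataset)  # normalize turns to role/content
dataset = apply_chat_template(dataset, tokenizer=tokenizer)  # render conversations into training text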

- Training
  - Initialize SFTTrainer
  - Run fine-tuning for 60 steps
  - Monitor training metrics
- Testing & Deployment
  - Test inference with the fine-tuned model
  - Save the LoRA adapters locally and, optionally, to the Hugging Face Hub
  - Convert the model to GGUF format
  - Deploy to Ollama
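
To make the three dataset calls concrete, here is a plain-Python sketch of the data shapes involved: CSV rows become ShareGPT-style conversations ("from"/"value" turns), which are then standardized to the "role"/"content" turns that chat templates consume. It only illustrates the transformation and is not Unsloth's implementation; the column names job_title and onet_code and the prompt wording are hypothetical.

```python
import csv
import io

# Hypothetical CSV layout: one job title and its O*NET code per row.
CSV_TEXT = """job_title,onet_code
Electricians,47-2111.00
"""

# ShareGPT speaker names mapped to chat-template roles.
ROLE_MAP = {"human": "user", "gpt": "assistant", "system": "system"}


def rows_to_sharegpt(rows, prompt_template, output_column):
    """Turn each CSV row into a ShareGPT-style conversation of from/value turns."""
    return [
        {
            "conversations": [
                {"from": "human", "value": prompt_template.format(**row)},
                {"from": "gpt", "value": row[output_column]},
            ]
        }
        for row in rows
    ]


def standardize(examples):
    """Rename from/value to role/content (human -> user, gpt -> assistant)."""
    return [
        {
            "conversations": [
                {"role": ROLE_MAP[turn["from"]], "content": turn["value"]}
                for turn in example["conversations"]
            ]
        }
        for example in examples
    ]


if __name__ == "__main__":
    rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))
    sharegpt = rows_to_sharegpt(rows, "What is the O*NET code for: {job_title}", "onet_code")
    print(standardize(sharegpt)[0])
```

The final apply_chat_template step then renders these role/content turns into training text using a chat template.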

Training Performance

Memory Usage

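- Peak reserved memory: 6.232 GB
- Peak memory used by training: 0.736 GB (peak reserved minus the 5.496 GB reserved before training)
- Peak reserved memory as a share of total GPU memory: 42.277%
- Training memory as a share of total GPU memory: 4.993%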
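
These figures follow the bookkeeping used in Unsloth's example notebooks: record the reserved memory before training, read the peak after training, and express both as a percentage of total GPU memory. A minimal sketch of the arithmetic in pure Python; in a real run the inputs would come from torch.cuda.max_memory_reserved() and the device's total memory.

```python
from dataclasses import dataclass


@dataclass
class MemoryReport:
    peak_reserved_gb: float
    training_gb: float
    peak_pct: float
    training_pct: float


def memory_report(total_gb: float, reserved_before_gb: float, peak_reserved_gb: float) -> MemoryReport:
    """Derive the training-memory figures from three measurements (all in GB)."""
    training_gb = round(peak_reserved_gb - reserved_before_gb, 3)
    return MemoryReport(
        peak_reserved_gb=peak_reserved_gb,
        training_gb=training_gb,
        peak_pct=round(peak_reserved_gb / total_gb * 100, 3),
        training_pct=round(training_gb / total_gb * 100, 3),
    )


if __name__ == "__main__":
    # Values from the T4 run described above.
    print(memory_report(total_gb=14.741, reserved_before_gb=5.496, peak_reserved_gb=6.232))
```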

Training Time

- Total time: 559.7778 seconds (9.33 minutes), about 9.3 seconds per step

Training Configuration

- 4-bit quantized base model for memory efficiency
- Unsloth gradient checkpointing (Unsloth reports about 30% less memory use)
- 8-bit AdamW optimizer
- Linear learning-rate schedule with a 5-step warmup
- 60 training steps in total
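
The linear schedule with warmup can be written out directly. This sketch reproduces the multiplier used by Hugging Face Transformers' get_linear_schedule_with_warmup (a linear ramp from 0 over the warmup steps, then a linear decay to 0 at the final step); the peak learning rate of 2e-4 is an assumption, since this run's value is not given here.

```python
def linear_warmup_decay(step: int, warmup_steps: int, total_steps: int) -> float:
    """LR multiplier in [0, 1]: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def learning_rate(step: int, peak_lr: float = 2e-4, warmup_steps: int = 5, total_steps: int = 60) -> float:
    # peak_lr = 2e-4 is an assumed value, not taken from this run.
    return peak_lr * linear_warmup_decay(step, warmup_steps, total_steps)


if __name__ == "__main__":
    for step in (0, 1, 5, 30, 59, 60):
        print(step, f"{learning_rate(step):.2e}")
```

This is the warmup-then-decay shape visible in the learning-rate panel of the training graphs.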

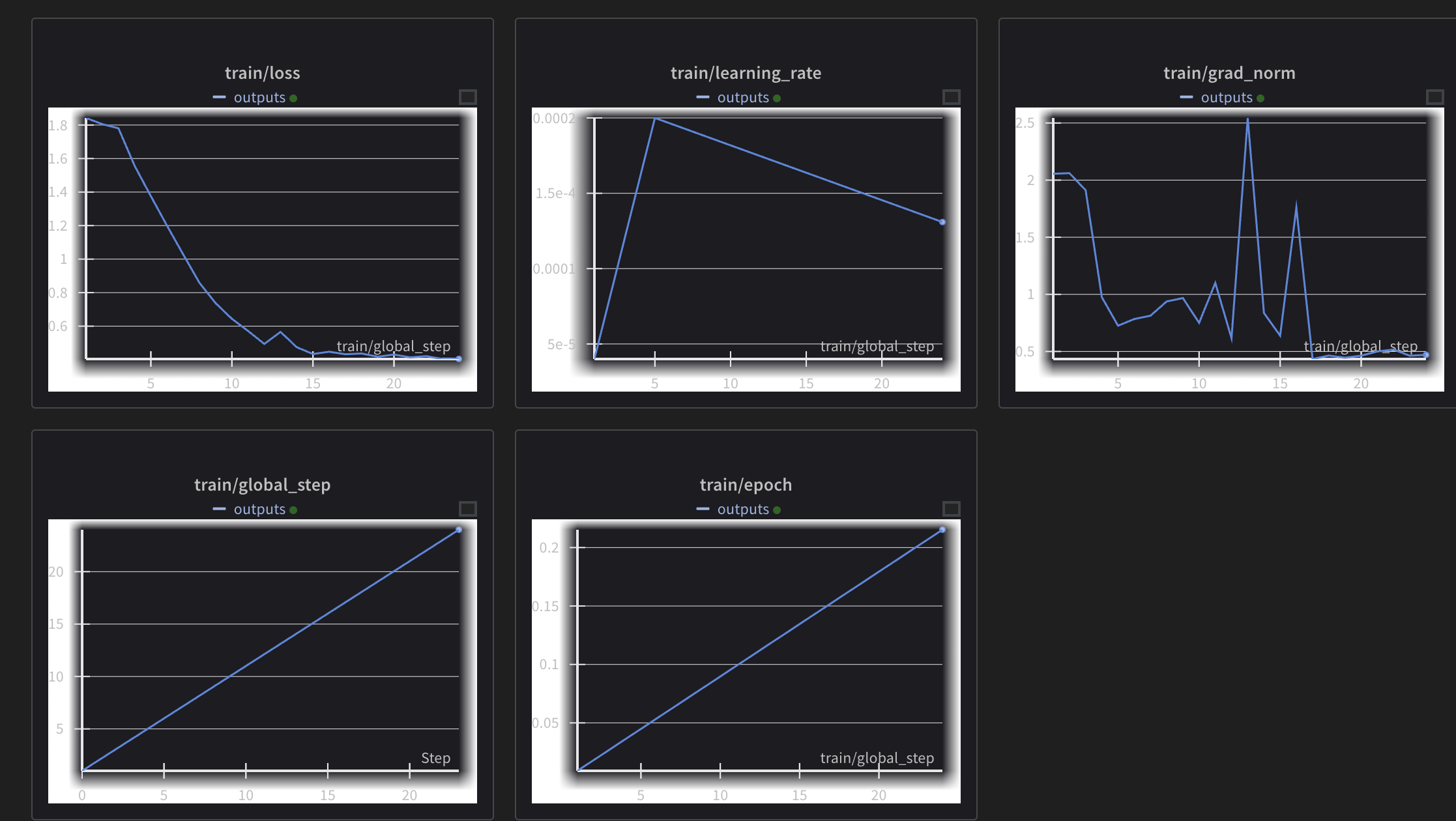

Training Metrics Analysis

The training graphs show healthy progression:

- Loss (top left): decreases steadily from ~1.8 to ~0.4
- Learning rate (top middle): ramps up during the 5-step warmup, then decays linearly
- Gradient norm (top right): fluctuates within a bounded range
- Global step and epoch (bottom): increase linearly

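Training indicators:

- Steady convergence of the training loss (no validation loss is plotted, so this alone does not measure generalization)
- Stable optimization
- No exploding gradients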
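
These indicators can also be checked numerically instead of by eye. A minimal sketch, assuming the log format of the Hugging Face Trainer's trainer.state.log_history (a list of dicts in which training entries carry "loss" and, in recent Transformers versions, "grad_norm"); flagging any gradient norm above 10× the median as a spike is an arbitrary, illustrative threshold.

```python
from statistics import median


def training_health(log_history, spike_factor: float = 10.0) -> dict:
    """Summarize loss trend and gradient-norm spikes from Trainer-style logs."""
    losses = [e["loss"] for e in log_history if "loss" in e]
    grad_norms = [e["grad_norm"] for e in log_history if "grad_norm" in e]
    if not losses:
        raise ValueError("no training loss entries found")
    # Average the first and last quarter of the losses to smooth step-to-step noise.
    k = max(1, len(losses) // 4)
    first, last = sum(losses[:k]) / k, sum(losses[-k:]) / k
    # spike_factor is an arbitrary threshold relative to the median gradient norm.
    typical = median(grad_norms) if grad_norms else 0.0
    spikes = [g for g in grad_norms if typical and g > spike_factor * typical]
    return {
        "loss_start": first,
        "loss_end": last,
        "loss_decreased": last < first,
        "grad_spikes": len(spikes),
    }
```

After training, training_health(trainer.state.log_history) returns the smoothed start and end loss and the number of gradient-norm spikes.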

Model Exports

1. LoRA Adapter Files

After training, saving the LoRA adapters produces this directory:

lora_model/
├── adapter_config.json        # LoRA configuration (rank, alpha, target modules)
├── adapter_model.safetensors  # LoRA weights
└── tokenizer files            # tokenizer configuration & vocabulary

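Save the adapters and tokenizer locally; uncomment the last two lines to also push them to the Hugging Face Hub:

model.save_pretrained("lora_model")  # saves only the LoRA adapter weights, not a merged model
tokenizer.save_pretrained("lora_model")
# model.push_to_hub("your_name/lora_model", token="...")
# tokenizer.push_to_hub("your_name/lora_model", token="...")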
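
adapter_config.json is a small JSON file written by PEFT; its r and target_modules fields determine how many parameters the adapters add. Each adapted linear layer of shape d_in × d_out gains r × (d_in + d_out) trainable weights (the LoRA A and B matrices). The sketch below reads the config and applies that formula, assuming LLaMA 3 8B's published dimensions (hidden size 4096, MLP size 14336, 8 key/value heads of dimension 128, 32 layers) and LoRA on the seven attention and MLP projections; which modules this run actually targeted is not stated above.

```python
import json
from pathlib import Path

# Assumed LLaMA 3 8B shapes (d_in, d_out) for each projection in one decoder layer.
HIDDEN, MLP, KV_DIM, LAYERS = 4096, 14336, 8 * 128, 32
PROJ_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, MLP),
    "up_proj": (HIDDEN, MLP),
    "down_proj": (MLP, HIDDEN),
}


def lora_param_count(rank: int, target_modules) -> int:
    """Trainable LoRA weights: rank * (d_in + d_out) per adapted matrix, per layer.

    Module names not in PROJ_SHAPES raise KeyError.
    """
    per_layer = sum(rank * (PROJ_SHAPES[m][0] + PROJ_SHAPES[m][1]) for m in target_modules)
    return per_layer * LAYERS


def params_from_config(adapter_dir: str) -> int:
    """Read r and target_modules (a list of names) from a saved adapter_config.json."""
    config = json.loads((Path(adapter_dir) / "adapter_config.json").read_text())
    return lora_param_count(config["r"], config["target_modules"])


if __name__ == "__main__":
    print(f"{lora_param_count(16, PROJ_SHAPES):,}")  # all seven projections at r=16
```

With all seven projections at rank 16 this gives 41,943,040 trainable weights, roughly 0.5% of the 8B base model.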

2. GGUF Export for Local Deployment

Export to GGUF format for Ollama/llama.cpp:

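model.save_pretrained_gguf("model", tokenizer, quantization_method="q8_0")  # merges the LoRA weights into the base model and writes a Q8_0 GGUF under ./model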

This creates:

- unsloth.Q8_0.gguf (8.53 GB): the complete model, with the fine-tuned LoRA weights merged into the base model and quantized to 8-bit (Q8_0) for efficient inference
- Modelfile: the Ollama configuration file, with the BOS token automatically removed from its chat template

The GGUF file can be used directly with llama.cpp, and the Modelfile registers it with Ollama.
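
Q8_0 is llama.cpp's basic 8-bit format: weights are split into blocks of 32, and each block stores one scale plus 32 signed 8-bit integers, with the scale chosen so the block's largest magnitude maps to ±127. The pure-Python sketch below illustrates that round trip; the real implementation is in C and stores the scale as float16, whereas this version keeps it as a Python float.

```python
import math

QK8_0 = 32  # block size used by llama.cpp's Q8_0


def quantize_q8_0(values):
    """Quantize a flat list of floats into (scale, int8 list) blocks of 32."""
    if len(values) % QK8_0:
        raise ValueError("length must be a multiple of 32")
    blocks = []
    for start in range(0, len(values), QK8_0):
        block = values[start:start + QK8_0]
        amax = max(abs(v) for v in block)
        d = amax / 127.0  # scale: largest magnitude maps to 127
        inv = 1.0 / d if d else 0.0
        # Round half away from zero, like C's roundf.
        qs = [int(math.copysign(math.floor(abs(v * inv) + 0.5), v)) for v in block]
        blocks.append((d, qs))
    return blocks


def dequantize_q8_0(blocks):
    """Reconstruct approximate floats: value = scale * q."""
    return [d * q for d, qs in blocks for q in qs]
```

The reconstruction error of each weight is at most half its block's scale, which is why Q8_0 is close to lossless for inference.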

Export stats:

- Writing speed: 45.2 MB/s
- Total size: 8.53 GB
- Format: Q8_0 quantization
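
The 8.53 GB size is roughly what Q8_0 predicts: each block of 32 weights takes 34 bytes (a 2-byte float16 scale plus 32 one-byte values), or 8.5 bits per weight. A back-of-the-envelope sketch, assuming LLaMA 3 8B's parameter count of 8,030,261,248 and treating every tensor as Q8_0 (in practice a few small tensors, such as norms, are stored at higher precision):

```python
def q8_0_size_bytes(n_params: int, block: int = 32) -> int:
    """Bytes needed to store n_params weights in Q8_0 (fp16 scale + one int8 per weight)."""
    n_blocks = -(-n_params // block)  # ceiling division
    return n_blocks * (2 + block)


if __name__ == "__main__":
    n = 8_030_261_248  # LLaMA 3 8B parameter count (assumed here)
    size = q8_0_size_bytes(n)
    print(f"{size / 1e9:.2f} GB, {size * 8 / n:.2f} bits per weight")
```

This prints about 8.53 GB at 8.5 bits per weight, which also explains why the export is larger than the ~5 GB 4-bit base model used for training.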

Base Model Details

The base model (unsloth/llama-3-8b-bnb-4bit) is:

- Meta's pretrained (base, not instruction-tuned) 8B-parameter LLaMA 3 model
- Quantized to 4-bit with bitsandbytes by Unsloth
- Knowledge cutoff: March 2023
- MMLU: 66.6% (5-shot)
- Uses Grouped-Query Attention (GQA)
- Pretrained on 15T+ tokens
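
Grouped-query attention shares each key/value head across several query heads, which mainly shrinks the KV cache at inference time. A sketch of the per-token KV-cache size, assuming LLaMA 3 8B's configuration (32 layers, 32 query heads, 8 key/value heads, head dimension 128) and a 16-bit cache:

```python
def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Keys and values (factor 2) stored for every layer and KV head."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value


def kv_cache_gib(tokens: int, **config) -> float:
    """Total KV-cache size in GiB for a given context length."""
    return tokens * kv_cache_bytes_per_token(**config) / 2**30


if __name__ == "__main__":
    # Assumed LLaMA 3 8B config: 8 KV heads with GQA; 32 would mean one KV head per query head.
    gqa = kv_cache_bytes_per_token(layers=32, kv_heads=8, head_dim=128)
    mha = kv_cache_bytes_per_token(layers=32, kv_heads=32, head_dim=128)
    print(gqa // 1024, "KiB/token with GQA vs", mha // 1024, "KiB/token without")
    print(f"{kv_cache_gib(8192, layers=32, kv_heads=8, head_dim=128):.2f} GiB for an 8192-token context")
```

With 8 KV heads instead of 32, the cache is a quarter of the size it would be with standard multi-head attention.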

Running the Model

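Before running it, register the exported GGUF with Ollama using the generated Modelfile, for example: ollama create llama3-job-classifier3 -f model/Modelfile. Use prompts similar to those in your fine-tuning dataset for best results. Unsloth's reported 2x inference speedup applies to its native Python inference path (FastLanguageModel.for_inference), which is useful for testing; the GGUF model served by Ollama does not use that path.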

ollama run llama3-job-classifier3 "What is the O*NET code for: Electricians"
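
The same query can also be sent from Python through Ollama's local REST API. A minimal standard-library sketch, assuming the Ollama server is running at its default address (http://localhost:11434) and the model is registered as llama3-job-classifier3; a POST to /api/generate with "stream": false returns a single JSON object whose "response" field holds the completion.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local Ollama endpoint


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming /api/generate request."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the model's completion text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Calling generate("llama3-job-classifier3", "What is the O*NET code for: Electricians") returns the answer as a string; the request is built separately so it can be inspected without a running server.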