Understanding MCP: Connecting AI to Tools and Data

Learn about the Model Context Protocol (MCP), how it standardizes AI tool use compared to older methods, and how to integrate it.

The Model Context Protocol (MCP) is emerging as a key standard for enabling Artificial Intelligence (AI) models, especially Large Language Models (LLMs), to interact securely and effectively with external tools and data sources. This post breaks down what MCP is, why it's an important step forward, and how developers can leverage it.

a) What is MCP? Why do we need MCP?

Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect devices to various peripherals, MCP offers a standardized protocol for connecting AI models to different data sources, APIs, and tools.

But wait, didn't LLMs use tools before MCP?

Yes, absolutely! The idea of LLMs using tools isn't new. Features like OpenAI's "Function Calling" allowed this. So, how did it work before MCP, and why is MCP an improvement?

Before MCP (The "Smart Intern" Way):

Imagine the LLM is like a very smart intern who understands requests but can't leave their desk to do things:

- You (the Developer's App) tell the intern what tasks you can do: "I can check the weather if you give me a city."

- User asks the intern: "What's the weather in Paris?"

- Intern realizes it needs help: It knows it can't check the weather itself.

- Intern tells YOUR APP what to do: It responds to your app (not the user yet) saying, "Please use the 'check weather' task for 'Paris'."

- YOUR APP does the work: Your application code takes that instruction, calls a weather API, and gets the result "18°C and sunny."

- YOUR APP tells the intern the result: Your code reports back, "The weather check returned '18°C and sunny'."

- Intern finally answers the user: Using the result you provided, the intern says, "The current weather in Paris is 18°C and sunny."

In this older model, the LLM was smart enough to figure out which tool to use and what information was needed, but your main application code was responsible for actually running the tool. This worked, but every app had to build its own logic for every tool, and it was often tied to a specific LLM provider's way of doing things.
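The intern flow above can be sketched in a few lines. This is a minimal illustration and not any provider's actual API: `fake_model`, `check_weather`, and the message shapes are hypothetical stand-ins, and the weather value is hard-coded.

```python
import json

def check_weather(city: str) -> str:
    # Stand-in for a real weather API call; the value is hard-coded for illustration.
    return "18°C and sunny"

# The application, not the model, owns the tool implementations.
TOOLS = {"check_weather": check_weather}

def fake_model(messages: list[dict]) -> dict:
    """Hypothetical LLM stand-in: asks for a tool first, then answers from its result."""
    last = messages[-1]
    if last["role"] == "user":
        return {"type": "tool_call", "name": "check_weather",
                "arguments": json.dumps({"city": "Paris"})}
    return {"type": "answer",
            "content": f"The current weather in Paris is {last['content']}."}

def run_turn(user_text: str) -> str:
    messages = [{"role": "user", "content": user_text}]
    reply = fake_model(messages)
    # Keep executing requested tools until the model produces a final answer.
    while reply["type"] == "tool_call":
        args = json.loads(reply["arguments"])
        result = TOOLS[reply["name"]](**args)  # YOUR APP does the work
        messages.append({"role": "tool", "name": reply["name"], "content": result})
        reply = fake_model(messages)
    return reply["content"]

print(run_turn("What's the weather in Paris?"))
```

Note that the `TOOLS` dictionary and the dispatch loop live inside the application. That is the part every app had to rebuild for each tool and each provider.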

Why MCP is an Improvement:

MCP takes this concept and makes it better by providing:

- Standardization (Protocol): A common language (like USB-C's plug shape and signals) for how the AI application (Client) asks a tool (Server) to do something and gets the result back. It's not tied to one specific LLM provider.

- Standardization (Architecture): It clearly separates the AI application (Client) from the tool implementation (Server). The Server is a dedicated program that runs a specific tool or a set of related tools. Your main app doesn't need to contain the tool's code itself.

- Decoupling & Reusability: Because servers are separate, they can be reused by many different AI applications (Clients). You can easily swap servers or clients.

- Open Ecosystem: Encourages anyone to build servers or clients that work together, creating a wider range of available tools.

So, while the idea isn't new, MCP provides a new, open standard for the protocol and promotes a standardized, decoupled architecture, making AI tool integration more robust, flexible, and scalable.
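To make the "common language" concrete: MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below builds such a request and reads a text result. The tool name `check_weather`, its arguments, and the sample response are illustrative assumptions, and the helper functions are not part of any SDK.

```python
import json

def make_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

def extract_text(response: dict) -> str:
    """Join the text parts of a tool result, failing if the tool reported an error."""
    result = response["result"]
    if result.get("isError"):
        raise RuntimeError("tool reported an error")
    return "".join(p["text"] for p in result["content"] if p["type"] == "text")

request = make_tool_call(1, "check_weather", {"city": "Paris"})

# Hand-written sample of what a server might send back for the request above.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "18°C and sunny"}],
               "isError": False},
}

print(json.dumps(request, indent=2))
print(extract_text(response))
```

Because the message shape is the same for every server, a client written once can talk to any tool that speaks the protocol.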

b) Servers and Clients

MCP uses a client-server architecture:

- MCP Host: An application (like an IDE, AI Chatbot, or your custom app) that wants to give its AI capabilities access to external tools/data.

- MCP Client: Lives within the Host. It speaks the MCP protocol to communicate with servers.

- MCP Server: A lightweight, separate program exposing specific capabilities (tools/resources) related to a particular data source or API (e.g., a database, file system, web service). It contains the actual logic to perform the tool's action.

MCP Servers

Description: Standalone programs that expose specific tools or data sources via the MCP protocol. They contain the code to perform actions (e.g., query a database, call an API).

Examples:

- Filesystem Server: Allows an AI (via a client) to securely read, write, or list files on a system.

- GitHub Server: Provides tools for interacting with GitHub repositories (reading files, searching code, etc.).

- Zapier MCP Server: Acts as a gateway, enabling an AI to interact with thousands of apps connected via Zapier.

MCP Clients / Hosts

Description: Applications that integrate AI models and use the MCP protocol to leverage external tools via servers. They manage connections and mediate communication.

Examples:

- Claude Desktop App: Allows users to configure and use various MCP servers directly within their chat interface.

- AI-Powered IDEs (e.g., Cursor): Integrate MCP servers to give the AI coding assistant access to the file system, Git, etc.

- Custom AI Applications: Developers build hosts using MCP SDKs (Python, TypeScript, Java, etc.) to connect their AI logic to MCP tools.

c) As a developer, should I create an MCP Server?

Yes, consider creating an MCP Server if:

- You have a unique tool, API, data source, or piece of internal infrastructure that you want to make accessible to AI models in a standardized and reusable way.

- You want others to easily integrate your service into their AI applications (contribute to the ecosystem).

- You want to provide a secure, controlled, and separate interface for AI to interact with your resources.

SDKs in various languages (Python, TypeScript, Go, Java, C#, Rust) make building servers significantly easier.
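To get a feel for what an SDK takes care of, here is a toy dispatcher for the two tool-related methods, `tools/list` and `tools/call`. It is a sketch under heavy assumptions: it skips the transport and the initialization handshake, and `check_weather` is a hypothetical tool. In practice you would use an SDK rather than hand-rolling this.

```python
import json
from typing import Any, Callable

def check_weather(city: str) -> str:
    # Hypothetical tool body; a real server would call a weather API here.
    return f"18°C and sunny in {city}"

# Tool registry: name -> (handler, description, JSON Schema for the arguments).
TOOLS: dict[str, tuple[Callable[..., str], str, dict[str, Any]]] = {
    "check_weather": (
        check_weather,
        "Return the current weather for a city.",
        {"type": "object",
         "properties": {"city": {"type": "string"}},
         "required": ["city"]},
    ),
}

def error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}

def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer one JSON-RPC request for the two tool-related methods."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (_, desc, schema) in TOOLS.items()
        ]}
    elif method == "tools/call":
        if params.get("name") not in TOOLS:
            return error(request["id"], -32602, "Unknown tool")
        handler = TOOLS[params["name"]][0]
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(request["id"], -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "check_weather", "arguments": {"city": "Paris"}}})
print(json.dumps(call, ensure_ascii=False))
```

The registry pairs each tool with a description and an input schema so that clients can discover what the server offers before calling it.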

d) As a developer, should I create an MCP Client?

Yes, consider creating an MCP Client (or integrating one into your Host application) if:

- You are building an AI application (chatbot, agent, workflow tool) that needs to leverage external tools or data.

- You want the flexibility to easily add or swap different tools (MCP servers) without major code changes in your main application.

- You want to utilize the existing ecosystem of pre-built MCP servers instead of building custom integrations for each tool from scratch.

You can build your own client with the SDKs, or use an existing host application (like Claude Desktop) that already supports MCP.

e) As a developer, how can I use MCP in my AI Apps (with LangChain / AutoGen / LlamaIndex)?

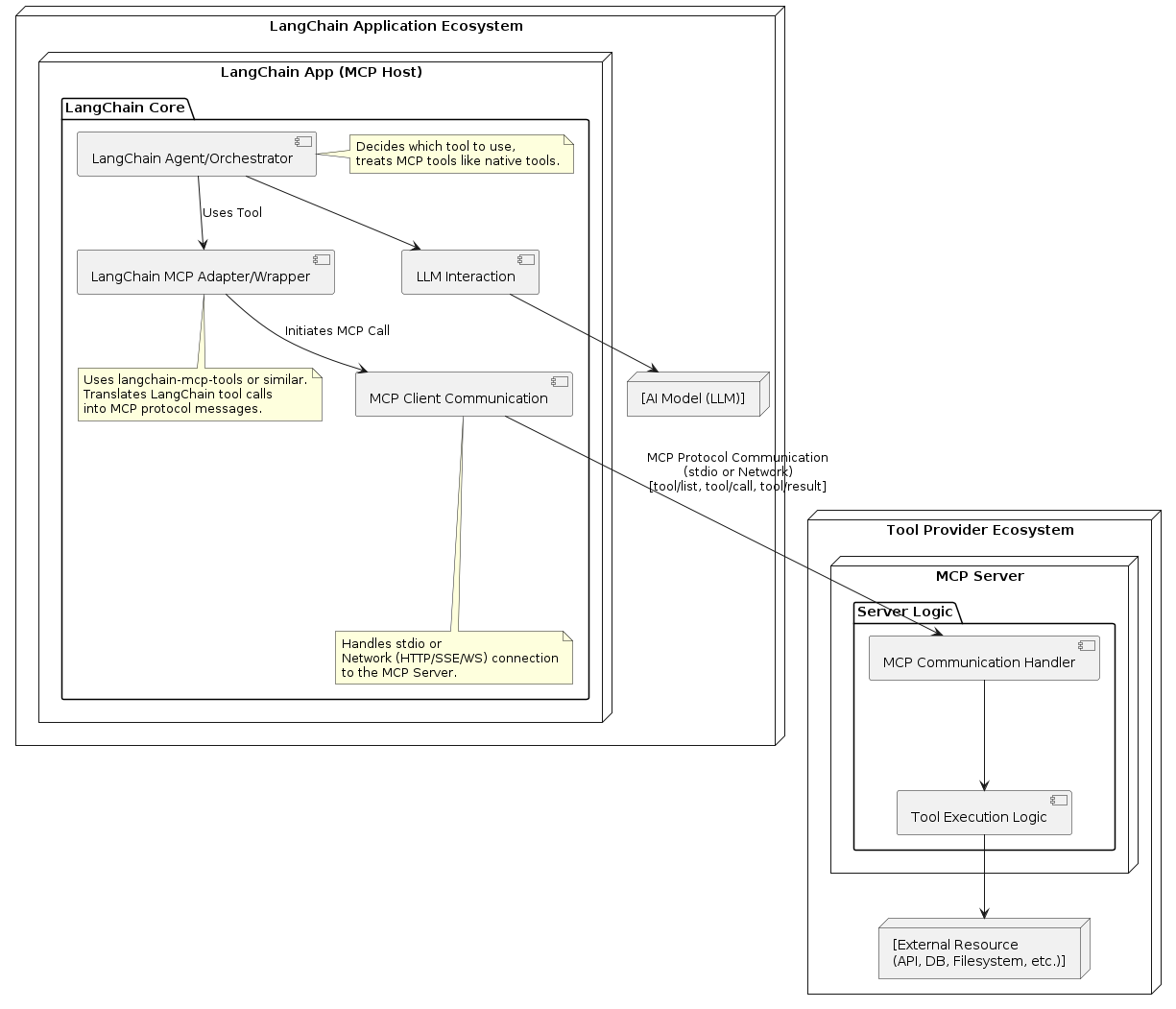

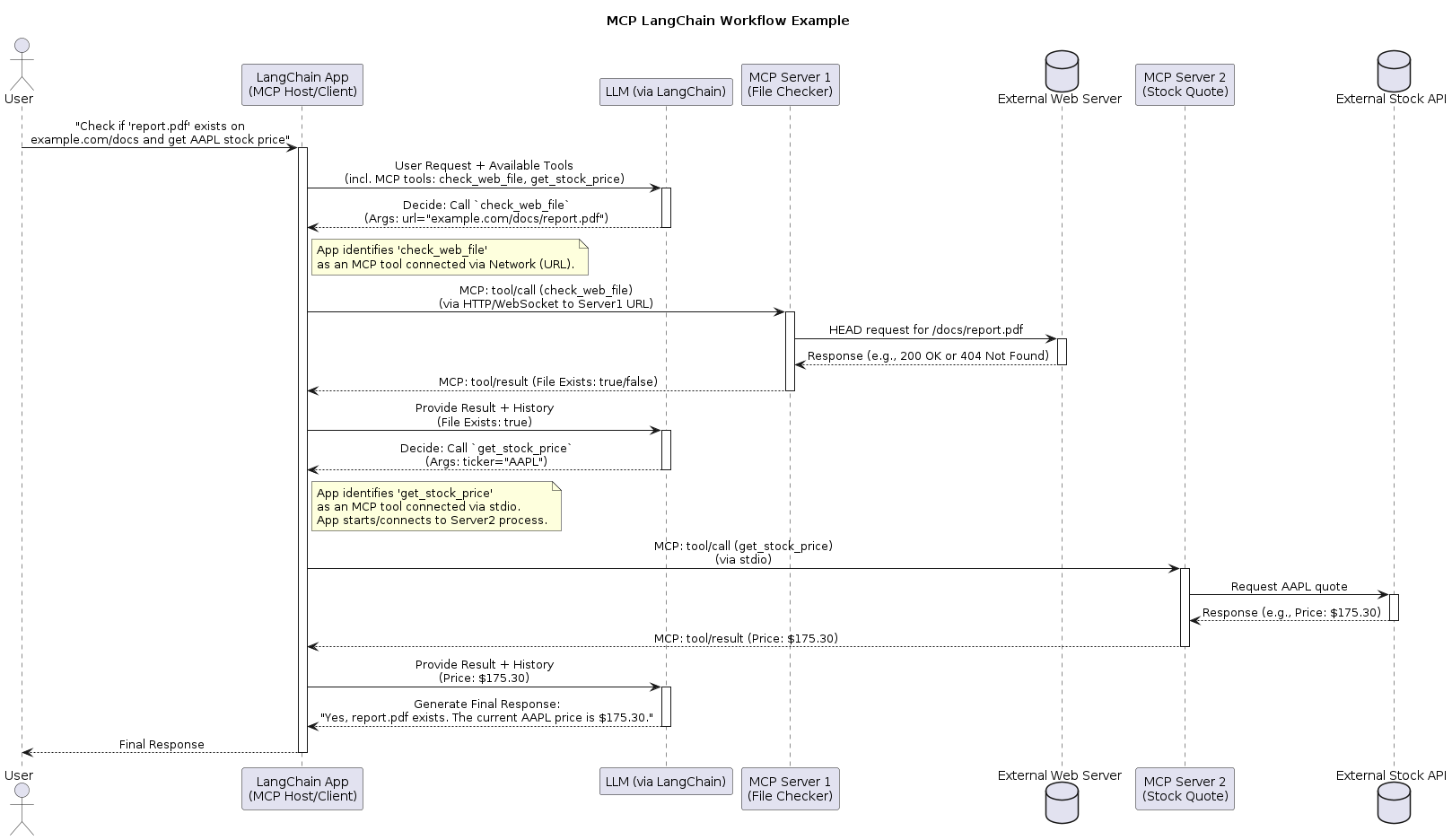

Integrating MCP with popular AI frameworks like LangChain, AutoGen, or LlamaIndex involves bridging the framework's tool/function-calling mechanism with MCP:

- Treat MCP Tools as Framework Tools: You can often wrap an MCP tool call within the structure expected by the framework (e.g., a LangChain `Tool` object or an AutoGen function definition).

- Framework as Host: Your application, built with LangChain/AutoGen/LlamaIndex, acts as the MCP Host. It needs an embedded MCP Client component.

- Mediation: When the LLM (managed by the framework) decides to use a tool that corresponds to an MCP tool, your code intercepts this, uses the MCP Client to call the appropriate MCP Server, receives the result, and formats it back for the framework and LLM.

- Utilities: Look for specific libraries or utilities designed for this purpose. For example, the `awesome-mcp-servers` list mentions `langchain-mcp` and `mcp-langchain-ts-client`, which aim to simplify this integration.

- SDKs: The core MCP SDKs provide the building blocks (`ClientSession`, `call_tool`, etc.) needed to make these calls from within your framework-based application.

The key is that the framework orchestrates the LLM, while the MCP client handles the standardized communication with the external tool servers.
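The wrapping and mediation steps can be sketched without committing to any one framework. The adapter below turns a named MCP tool into a plain async callable, which is roughly the shape most frameworks can register as a tool. `FakeSession` is a hypothetical stand-in for a connected client session; it only assumes an async `call_tool(name, arguments)` method, and the real SDK object returns a richer result than a plain string.

```python
import asyncio
from typing import Any, Awaitable, Callable, Protocol

class ToolSession(Protocol):
    """Minimal interface this sketch assumes a client session provides."""
    async def call_tool(self, name: str, arguments: dict[str, Any]) -> Any: ...

class FakeSession:
    """Hypothetical stand-in for a connected MCP client session (no network)."""
    async def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name == "check_weather":
            return f"18°C and sunny in {arguments['city']}"
        raise ValueError(f"unknown tool: {name}")

def as_framework_tool(session: ToolSession, name: str) -> Callable[..., Awaitable[Any]]:
    """Wrap one MCP tool as an async function a framework could register."""
    async def tool(**kwargs: Any) -> Any:
        # Mediation: forward the framework's keyword arguments to the MCP server.
        return await session.call_tool(name, kwargs)
    tool.__name__ = name
    return tool

weather = as_framework_tool(FakeSession(), "check_weather")
print(asyncio.run(weather(city="Paris")))
```

Keeping the adapter this thin means swapping the server behind `session` does not touch the framework-facing code.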

f) How can I use MCP locally?

Using MCP locally is straightforward and a common use case:

- Run Servers Locally: Many MCP servers (especially reference ones like Filesystem, Git, SQLite, PostgreSQL connecting to a local DB) are designed to run directly on your machine. You can typically start them using `npx` (for Node.js servers) or `uvx`/`pip` (for Python servers), or via Docker.

  ```bash
  # Example: Run the filesystem server locally
  npx -y @modelcontextprotocol/server-filesystem /path/to/your/project

  # Example: Run the git server locally (using uvx)
  uvx mcp-server-git --repository /path/to/your/repo
  ```

- Configure Local Clients: MCP Host applications running locally (like Claude Desktop or a custom app) can be configured to connect to these locally running servers. The configuration usually involves specifying the command to start the server (as shown above).

- Development & Testing: Running servers locally is ideal for development, testing, and accessing local resources like codebases or databases without exposing them externally.

- Bridging (Optional): If you need a remote client to access a local server, tools like `ngrok` combined with `Supergateway` can bridge the connection, exposing your local stdio-based server over the network via SSE or WebSockets.
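As an illustration of the "Configure Local Clients" step, the snippet below generates a host configuration that launches the two example servers by command. It assumes the `mcpServers` layout that Claude Desktop reads from its `claude_desktop_config.json` file; other hosts may expect a different shape. The `filesystem` and `git` keys are arbitrary labels, and `stdio_server_entry` is a hypothetical helper.

```python
import json

def stdio_server_entry(command: str, *args: str) -> dict:
    """Describe how the host should launch one local, stdio-based server."""
    return {"command": command, "args": list(args)}

config = {
    "mcpServers": {
        # Labels are arbitrary; paths are the placeholders from the commands above.
        "filesystem": stdio_server_entry(
            "npx", "-y", "@modelcontextprotocol/server-filesystem",
            "/path/to/your/project"),
        "git": stdio_server_entry(
            "uvx", "mcp-server-git", "--repository", "/path/to/your/repo"),
    }
}

print(json.dumps(config, indent=2))
```

The host starts each server as a child process using the listed command and arguments, so nothing has to be exposed on the network for local use.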

MCP offers a powerful, standardized approach to expanding the capabilities of AI. By understanding its architecture and how it improves upon older methods, developers can both contribute to and leverage this growing ecosystem to build more sophisticated and functional AI applications. Explore the official documentation at modelcontextprotocol.io to dive deeper.