Next-Gen AI: Cognitive Primitives

Discover the essential skills (reasoning, planning, tool use) driving advanced AI development across major labs and enabling agentic systems.

The landscape of artificial intelligence is evolving rapidly, and a notable pattern is emerging. Leading AI research labs, including OpenAI, Anthropic, and Google DeepMind, appear to be independently converging on a shared set of fundamental capabilities for their most advanced LLMs. Despite differing architectures and training methods, these organizations are increasingly building their systems around similar core functions, often referred to as cognitive primitives.

These primitives (reasoning, planning, tool use, search, code execution, and computer control) represent the essential building blocks for more general-purpose artificial intelligence. They are moving AI beyond simple pattern matching and text prediction towards systems that can understand complex requests, formulate plans, interact with external tools, and execute tasks autonomously.

This post explores this convergence, delves into what these cognitive primitives are, examines how protocols like the Model Context Protocol (MCP) help operationalize them, and discusses the trajectory toward unified AI agents.

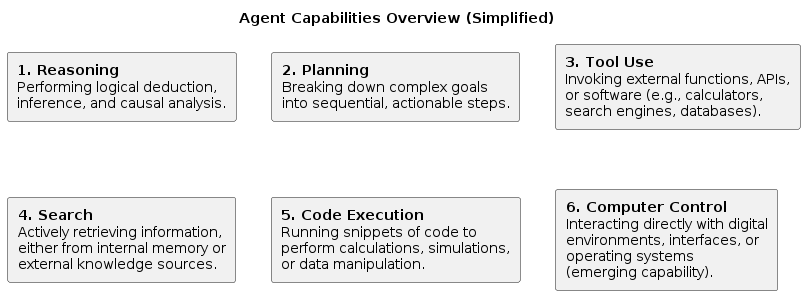

What are Cognitive Primitives?

In the context of Large Language Models (LLMs) and AI systems, cognitive primitives are fundamental, modular operations or capabilities that serve as the building blocks for higher-level reasoning, problem-solving, and intelligent behavior. Think of them as the basic mental toolkit for an AI.

Key cognitive primitives that are becoming central to modern AI include:

- Reasoning: Performing logical deduction, inference, and causal analysis.

- Planning: Breaking down complex goals into sequential, actionable steps.

- Tool Use: Invoking external functions, APIs, or software (e.g., calculators, search engines, databases).

- Search: Actively retrieving information, either from internal memory or external knowledge sources.

- Code Execution: Running snippets of code to perform calculations, simulations, or data manipulation.

- Computer Control: Interacting directly with digital environments, interfaces, or operating systems (emerging capability).

These primitives allow AI systems to tackle problems in a more structured, explainable, and flexible manner, much like humans combine basic cognitive skills to handle diverse challenges.

Reasoning LLMs are Agents

Reasoning LLMs behave as agents when they are embedded in a system that lets them:

- Perceive: take in user input and observations from the environment.

- Reason: deliberate and plan actions.

- Act: execute tool calls, API interactions, or other operations.

- Remember: track context and history.

- Operate autonomously: carry out these steps on their own to achieve user-defined goals.
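The perceive-reason-act-remember cycle can be sketched as a small loop. This is a minimal illustration, not any lab's implementation: `scripted_model` is a hypothetical stand-in for an LLM that follows a fixed policy, and the `add` tool, the `Action` type, and `run_agent` are names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical tool registry; a real agent would call external APIs here.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split())),
}

@dataclass
class Action:
    kind: str          # "tool" or "finish"
    name: str = ""     # tool name when kind == "tool"
    payload: str = ""  # tool arguments, or the final answer when finishing

def scripted_model(goal: str, memory: list[str]) -> Action:
    """Hypothetical stand-in for an LLM: a fixed policy used only for illustration."""
    if not any(m.startswith("observation:") for m in memory):
        return Action("tool", "add", "2 3 4")
    last = memory[-1].removeprefix("observation: ")
    return Action("finish", payload=f"The sum is {last}")

def run_agent(goal: str, model=scripted_model, max_steps: int = 5) -> str:
    memory: list[str] = [f"goal: {goal}"]            # Remember: context and history
    for _ in range(max_steps):
        action = model(goal, memory)                 # Reason: decide the next action
        if action.kind == "finish":
            return action.payload
        result = TOOLS[action.name](action.payload)  # Act: execute a tool call
        memory.append(f"observation: {result}")      # Perceive: record the outcome
    return "stopped: step limit reached"

print(run_agent("Add 2, 3 and 4"))  # → The sum is 9
```

The step limit is a simple guard against an agent that never decides to finish; real systems typically add cost and time budgets as well.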

While each major AI lab pursues its own research directions, the systems they are developing increasingly rely on integrating these core primitives.

- OpenAI's GPT models demonstrate strong reasoning, code execution, and tool use (via function calling).

- Anthropic's Claude models emphasize reasoning and safety, and also support tool use.

- Google DeepMind's Gemini is designed as a multimodal model that integrates text, code, and image understanding, and it is increasingly paired with planning and tool-interaction features such as function calling.

This convergence isn't necessarily coordination between the labs; more likely it reflects a shared recognition that these specific capabilities are crucial for overcoming the limitations of earlier models and achieving more robust, general intelligence. The ultimate goal appears to be unified AI agents: single systems that can perceive, reason, and act effectively across multiple domains and modalities.

How LLMs Leverage Primitives

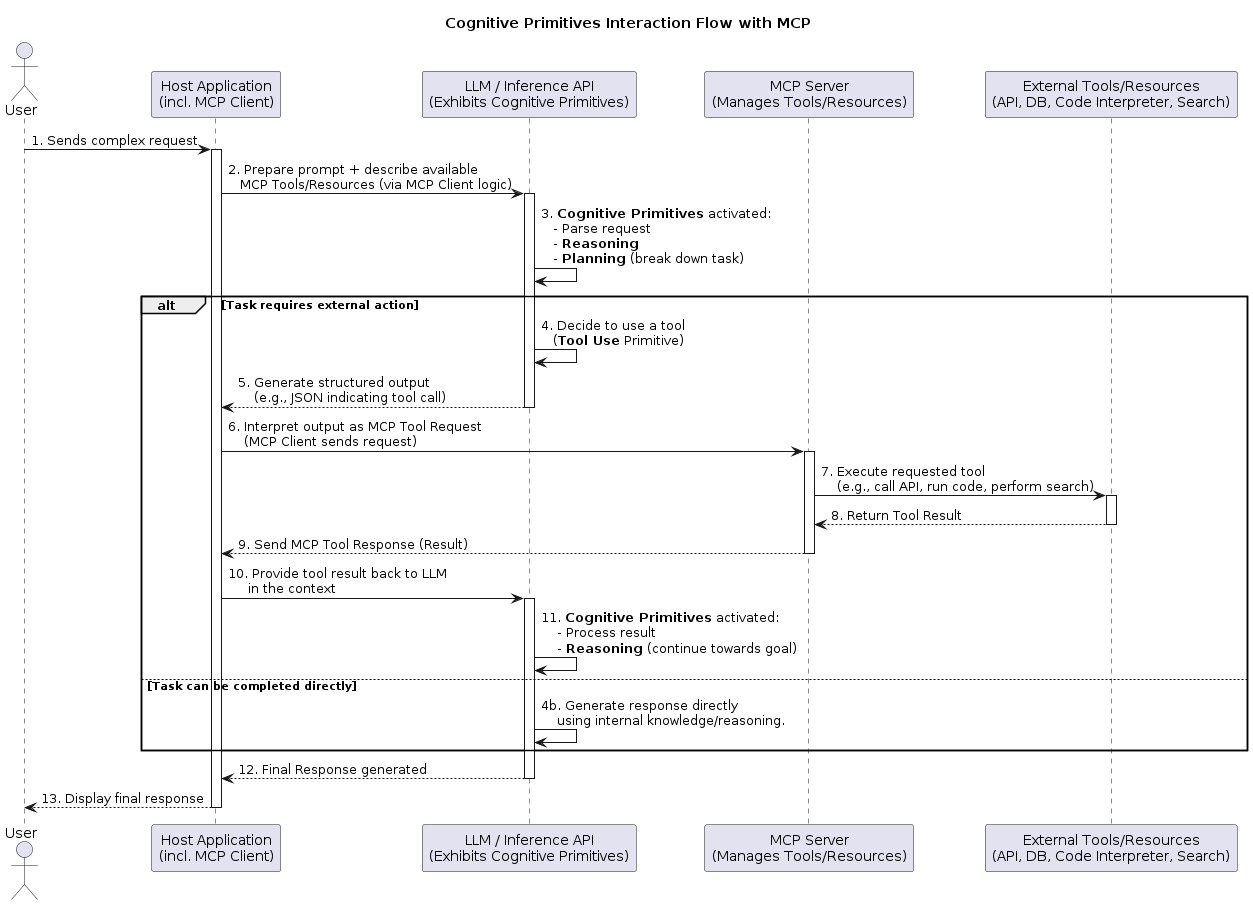

LLMs are trained or fine-tuned to recognize when a specific task requires invoking one or more cognitive primitives. When faced with a complex problem:

- The LLM might first parse the input to understand the core requirements.

- It could then plan a sequence of steps.

- This plan might involve searching for information, using a tool (like a calculator or API), or executing code.

- The results from these actions are fed back into the model's context, allowing it to continue reasoning towards a final solution.
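The parse, plan, execute, and feed-back steps above can be sketched as a plan-and-execute loop. In this hypothetical example the model has already produced a two-step plan. `search` and `calculator` are toy primitives invented for illustration (a real system would call a search API or a sandboxed interpreter), and the `{prev}` placeholder is an assumed convention for passing the previous result forward.

```python
import math

def search(query: str) -> str:
    # Toy in-memory knowledge source (hypothetical data).
    corpus = {"radius of the test circle": "3"}
    return corpus.get(query, "not found")

def calculator(expression: str) -> str:
    # Deliberately tiny: supports only "area_of_circle <r>" and avoids eval().
    op, arg = expression.split()
    if op == "area_of_circle":
        return f"{math.pi * float(arg) ** 2:.2f}"
    raise ValueError(f"unsupported operation: {op}")

PRIMITIVES = {"search": search, "calculator": calculator}

def execute_plan(plan: list[tuple[str, str]]) -> list[str]:
    """Run each (primitive, argument) step and append its result to the context.

    "{prev}" in an argument is replaced with the previous step's result,
    standing in for the model reading its updated context.
    """
    context: list[str] = []
    for primitive, argument in plan:
        if context:
            argument = argument.replace("{prev}", context[-1])
        context.append(PRIMITIVES[primitive](argument))
    return context

# A plan the model might produce for "What is the area of the test circle?"
plan = [("search", "radius of the test circle"), ("calculator", "area_of_circle {prev}")]
print(execute_plan(plan))  # → ['3', '28.27']
```

In a real system the model would see each result before choosing or revising the next step, rather than committing to the whole plan up front.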

Research suggests that using simpler, more atomic primitives often leads to better performance, particularly on complex tasks, as they provide clearer, more manageable logical steps for the model.

Connecting Primitives to Action: The Role of Protocols (like MCP)

Cognitive primitives like "tool use" or "search" are concepts. To make them real, AI systems need a way to actually interact with the outside world or external software. This is where interaction protocols come into play.

The Model Context Protocol (MCP) is one example of a standardized way to structure these interactions. MCP defines its own set of protocol-level primitives:

- Tools: Allow the LLM to request the execution of external functions (e.g., call an API, run code).

- Resources: Provide structured data (e.g., files, database results) for the LLM to reference.

- Prompts: Offer predefined templates to guide the LLM's behavior consistently.
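MCP messages are JSON-RPC 2.0 requests, and each of the three primitives has its own methods (`tools/call`, `resources/read`, `prompts/get`). The sketch below only builds the request payloads. The tool name `get_weather`, the file URI, and the prompt name `summarize` are hypothetical, and the transport (stdio or HTTP) is left out.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC requests carry an id so responses can be matched

def jsonrpc_request(method: str, params: dict) -> str:
    """Build a JSON-RPC 2.0 request, the message format MCP is built on."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params})

# Tools: ask the server to execute a function (hypothetical tool and arguments).
call_tool = jsonrpc_request("tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})
# Resources: read structured data identified by a URI (hypothetical file).
read_resource = jsonrpc_request("resources/read", {"uri": "file:///project/notes.md"})
# Prompts: fetch a predefined template and fill in its arguments (hypothetical prompt).
get_prompt = jsonrpc_request("prompts/get", {"name": "summarize", "arguments": {"style": "brief"}})

for message in (call_tool, read_resource, get_prompt):
    print(message)
```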

Relationship: In an MCP-enabled system, the abstract cognitive primitive of "tool use" is implemented by the LLM issuing a structured call to an MCP Tool primitive. Similarly, "search" might be implemented via a specific Tool designed for web or database querying. MCP provides the standardized action mechanism for the LLM to exercise its cognitive capabilities in the real world.
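To make the mapping from cognitive primitive to protocol primitive concrete, here is a toy, in-process server that exposes a keyword "search" capability as a Tool and answers `tools/list` and `tools/call` requests. It is a simplified sketch, assuming an in-memory document store and a hypothetical tool name `search_documents`; a real MCP server would use an SDK, a transport, and proper JSON-RPC error responses.

```python
import json

# Toy document store standing in for a web index or database (hypothetical data).
DOCUMENTS = {
    "mcp": "MCP standardizes how applications expose tools, resources, and prompts.",
    "json-rpc": "JSON-RPC 2.0 is a lightweight remote procedure call format.",
}

def search_documents(query: str) -> str:
    hits = [text for key, text in DOCUMENTS.items() if query.lower() in key]
    return "\n".join(hits) or "no results"

# The cognitive primitive "search" exposed as a protocol-level Tool.
TOOLS = {
    "search_documents": {
        "description": "Search the internal document store by keyword.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
        "handler": lambda args: search_documents(args["query"]),
    }
}

def handle(raw_request: str) -> str:
    """Dispatch a JSON-RPC request to the matching tool (simplified, no error codes)."""
    request = json.loads(raw_request)
    if request["method"] == "tools/list":
        result = {"tools": [{"name": name, "description": t["description"],
                             "inputSchema": t["inputSchema"]} for name, t in TOOLS.items()]}
    elif request["method"] == "tools/call":
        tool = TOOLS[request["params"]["name"]]
        text = tool["handler"](request["params"]["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        raise ValueError(f"unsupported method: {request['method']}")
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})

request = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
           "params": {"name": "search_documents", "arguments": {"query": "MCP"}}}
print(handle(json.dumps(request)))
```

The model never sees `search_documents` as code; it only sees the name, description, and input schema returned by `tools/list`.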

LLM Compatibility: Tool Use is Key

Crucially, the LLM itself doesn't need built-in knowledge of MCP. What it does need is to be "tool-usage compatible". This means the LLM must be able to:

- Understand descriptions of available tools and resources provided in its context/prompt.

- Generate structured output (often JSON or a specific function call format) when it decides to invoke a tool.
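On the model side, these two requirements amount to reading tool descriptions and emitting parseable structured output. The sketch below shows a host rendering a tool description into prompt text, then parsing and minimally validating a tool call. The `{"tool": ..., "arguments": ...}` output shape and the `get_weather` tool are assumptions for illustration; each provider defines its own function-calling format.

```python
import json

# Tool descriptions as a host might place them in the model's context
# (hypothetical tool; the parameter schema follows JSON Schema conventions).
TOOL_SPECS = {
    "get_weather": {
        "description": "Get the current weather for a city.",
        "parameters": {"type": "object",
                       "properties": {"city": {"type": "string"}},
                       "required": ["city"]},
    }
}

def describe_tools(specs: dict) -> str:
    """Render tool descriptions as text the model can read in its prompt."""
    return "\n".join(f"- {name}: {spec['description']} "
                     f"(arguments: {json.dumps(spec['parameters']['properties'])})"
                     for name, spec in specs.items())

def parse_tool_call(model_output: str, specs: dict) -> tuple[str, dict]:
    """Parse and minimally validate a structured tool call emitted by the model.

    Assumes the hypothetical shape {"tool": <name>, "arguments": {...}} and only
    checks the tool name and required keys; a real host would run a full
    JSON Schema validator.
    """
    call = json.loads(model_output)  # raises json.JSONDecodeError on malformed output
    name, args = call["tool"], call.get("arguments", {})
    if name not in specs:
        raise ValueError(f"unknown tool: {name}")
    missing = [k for k in specs[name]["parameters"]["required"] if k not in args]
    if missing:
        raise ValueError(f"missing arguments for {name}: {missing}")
    return name, args

print(describe_tools(TOOL_SPECS))
print(parse_tool_call('{"tool": "get_weather", "arguments": {"city": "Paris"}}', TOOL_SPECS))
```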

Modern LLMs like GPT-4, Claude 3, and Gemini are generally designed with this capability. The application hosting the LLM (using an MCP Client, for instance) handles the protocol details: it presents the tools to the LLM, interprets the LLM's request to use a tool, executes the action via the MCP Server, and feeds the result back to the LLM.
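The host's adapter role can be sketched as three small translations: MCP tool listings into the model's function-calling format, the model's tool request into an MCP `tools/call` message, and the MCP result back into text for the model's context. The generic `{"name", "description", "parameters"}` schema is an assumption modeled on common function-calling APIs, and the weather data is hypothetical.

```python
import json

def mcp_tools_to_model_format(tools_list_result: dict) -> list[dict]:
    """Translate an MCP tools/list result into a generic function-calling schema.

    The output shape is an assumption; each model provider defines its own.
    """
    return [{"name": t["name"], "description": t.get("description", ""),
             "parameters": t["inputSchema"]} for t in tools_list_result["tools"]]

def model_call_to_mcp_request(call: dict, request_id: int) -> dict:
    """Wrap the model's structured tool request in an MCP tools/call message."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": call["name"], "arguments": call["arguments"]}}

def mcp_result_to_context(result: dict) -> str:
    """Flatten the text items of a tools/call result so they can be fed back to the model."""
    texts = [item["text"] for item in result["content"] if item.get("type") == "text"]
    prefix = "Tool error: " if result.get("isError") else "Tool result: "
    return prefix + "\n".join(texts)

# Example round trip with hypothetical data.
listing = {"tools": [{"name": "get_weather", "description": "Current weather",
                      "inputSchema": {"type": "object",
                                      "properties": {"city": {"type": "string"}}}}]}
print(mcp_tools_to_model_format(listing))
print(json.dumps(model_call_to_mcp_request({"name": "get_weather", "arguments": {"city": "Paris"}}, 1)))
print(mcp_result_to_context({"content": [{"type": "text", "text": "sunny, 18 C"}], "isError": False}))
```

Keeping these translations in the host is what lets the same MCP server work with any tool-usage-compatible model.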

The Road Ahead: Unified AI Agents

The convergence on core cognitive primitives, enabled by interaction protocols, is paving the way for the next generation of AI. We are moving beyond models that just predict text towards integrated agents that can:

- Understand complex, multi-step instructions.

- Plan and execute tasks autonomously.

- Interact dynamically with software and information sources.

- Learn and adapt from their interactions.

These advancements promise powerful new applications across science, business, and daily life, while also raising important considerations about safety, control, and societal impact.

Key Takeaways

- Leading AI labs are converging on a core set of 'cognitive primitives' (reasoning, planning, tool use, etc.) as the foundation for advanced AI.

- These primitives act as modular building blocks for complex problem-solving and agentic behavior.

- Protocols like MCP provide standardized ways for LLMs to operationalize these primitives by interacting with external tools and resources.

- LLMs don't need to know the protocol itself, but must be 'tool-usage compatible' – able to understand tool descriptions and request their use via structured output.

- This trend points towards the development of unified AI agents capable of perception, reasoning, and action across diverse domains.

The journey towards truly general AI is complex, but the emergence of these shared cognitive building blocks marks a significant and exciting step forward.

References

- https://wandb.ai/onlineinference/mcp/reports/The-Model-Context-Protocol-MCP-by-Anthropic-Origins-functionality-and-impact--VmlldzoxMTY5NDI4MQ

- https://www.ikangai.com/model-context-protocol-architecture/

- https://www.anthropic.com/claude/sonnet

- https://docs.aws.amazon.com/bedrock/latest/userguide/bedrock-runtime_example_bedrock-runtime_ConverseStream_AnthropicClaudeReasoning_section.html

- https://docs.anthropic.com/en/docs/agents-and-tools/claude-code/overview

- https://aclanthology.org/2024.lrec-main.532/ (PDF: https://aclanthology.org/2024.lrec-main.532.pdf)